Release time: 2023-09-06 | Source: Slkor

The exponential spread of ChatGPT

ChatGPT was released by OpenAI on November 30, 2022, and quickly gained popularity worldwide. It reached 100 million active users in record time: Facebook took 54 months to reach that milestone, X (formerly Twitter) 49 months, Instagram 30 months, LINE 19 months, and TikTok 9 months, while ChatGPT did it in just two months.

Its performance also improved rapidly. In January 2023, ChatGPT's answers on an MBA final exam were graded B, a passing grade, and in February its accuracy on the US Medical Licensing Examination reached the passing threshold. In March, GPT-4 was reported to score in the top 10% on the US bar exam, and in May it was reported to reach a level sufficient to pass the past five years of Japan's national medical licensing examination.

The arrival of generative AI, led by ChatGPT, set off a boom, and high-tech companies rushed to develop generative AI of their own. Generative AI runs on a type of semiconductor called a Graphics Processing Unit (GPU), a field in which NVIDIA holds a monopoly position.

Here, generative AI refers to AI systems that can generate content such as text, images, or even music. These systems mimic human creativity by producing new outputs based on patterns learned from training data. They are built on neural networks, using models such as GPT (Generative Pre-trained Transformer), which is based on a deep learning architecture.
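To make "generating new outputs from learned patterns" concrete, here is a deliberately tiny stand-in. It is not GPT and uses no neural network: it is a bigram (word-pair) model, an assumption chosen only because it shows the same two ideas in a few lines. Training records which word follows which in a toy corpus; generation then samples new word sequences from those learned pairs. The corpus, function names, and seed are all hypothetical.

```python
import random
from collections import defaultdict


def train_bigram(corpus):
    """Learning: record which word follows which (the 'patterns' in the data)."""
    follows = defaultdict(list)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev].append(nxt)
    return follows


def generate(follows, start, max_words=8, seed=0):
    """Generation: repeatedly sample a word that was seen after the last one."""
    rng = random.Random(seed)  # fixed seed so the toy output is reproducible
    words = [start]
    # Stop when the length limit is hit or the last word was never followed by anything.
    while len(words) < max_words and follows.get(words[-1]):
        words.append(rng.choice(follows[words[-1]]))
    return " ".join(words)


# Hypothetical toy training data.
corpus = [
    "GPUs train large models",
    "GPUs run large models fast",
    "servers run generative models",
]
model = train_bigram(corpus)
print(generate(model, "GPUs"))
```

A transformer such as GPT replaces the word-pair table with a neural network that conditions on the whole preceding text, but the pattern of learning from data and then sampling new output is the same.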

GPUs play a crucial role in both training and inference for generative AI. They excel at parallel processing, handling large amounts of data simultaneously, which speeds up the computations that neural networks require during both training and generation. As the leading GPU manufacturer, NVIDIA has established a dominant position in this field thanks to powerful hardware optimized for AI workloads.
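Why parallelism matters can be seen in a single neural-network layer. In the minimal sketch below (plain Python, with hypothetical weights and function names), each output neuron is a dot product that does not depend on any other neuron, so all of them can be computed at the same time. A GPU exploits this kind of independence across thousands of hardware threads; the Python thread pool here only demonstrates that the results are identical whether computed one by one or concurrently, and it should not be read as a performance benchmark.

```python
from concurrent.futures import ThreadPoolExecutor


def neuron_output(weights_row, inputs, bias):
    """One output neuron: a dot product plus bias, independent of every other neuron."""
    return sum(w * x for w, x in zip(weights_row, inputs)) + bias


def dense_layer_sequential(W, x, b):
    # Compute the neurons one after another.
    return [neuron_output(row, x, bi) for row, bi in zip(W, b)]


def dense_layer_parallel(W, x, b, workers=4):
    # Submit each neuron as a separate task. Because no task depends on another,
    # they may run in any order or all at once. (Pure-Python arithmetic will not
    # actually speed up under threads; this only illustrates the independence.)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(neuron_output, W, [x] * len(W), b))


# Hypothetical 3-neuron layer with 3 inputs.
W = [[0.5, -1.0, 2.0], [1.0, 1.0, 1.0], [0.0, 3.0, -0.5]]
x = [1.0, 2.0, 3.0]
b = [0.1, 0.0, -1.0]
print(dense_layer_parallel(W, x, b))
```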

ChatGPT works in two stages

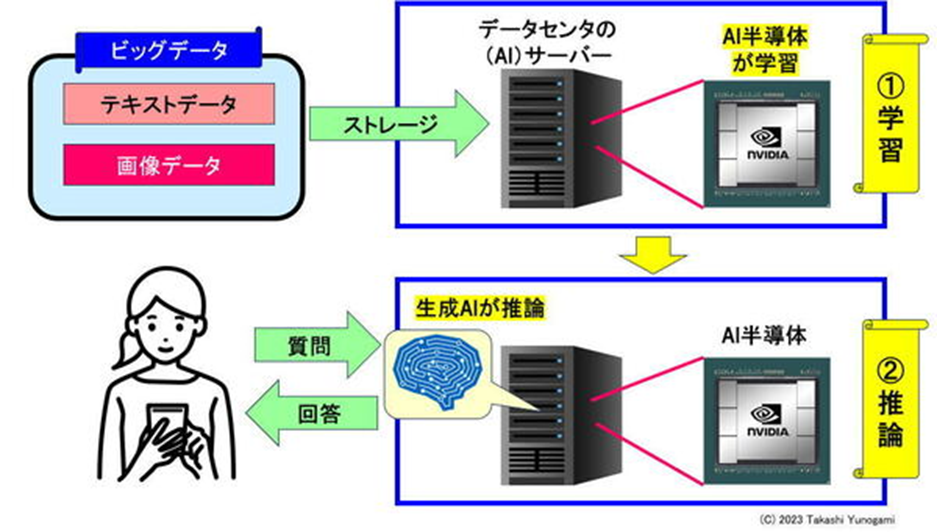

Figure 5 shows how generative AI such as ChatGPT works. The process can be divided into two stages: learning (training) and inference.

Figure 5: The principle of generative AI and the AI semiconductor it uses (NVIDIA GPU)

First, large volumes of text, images, and other data from the internet are loaded into servers equipped with AI semiconductors such as NVIDIA GPUs (hereafter, AI servers). The AI semiconductors, such as the GPUs, then learn from this data.
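As a rough illustration of what "learning from the data" means, the sketch below trains a two-parameter linear model by gradient descent, assumed here as a simplified stand-in for the training of a model like GPT (which adjusts billions of parameters, typically to predict the next token). The data, learning rate, and epoch count are hypothetical. Each pass nudges the parameters to reduce the error on the training data; on an AI server, this kind of repeated arithmetic is what the GPUs spend their time on.

```python
def train_linear(data, lr=0.05, epochs=2000):
    """Learning stage: repeatedly adjust w and b to reduce mean squared error."""
    w, b = 0.0, 0.0  # untrained starting parameters
    n = len(data)
    for _ in range(epochs):
        # Gradients of mean((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Step against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical toy training data generated from y = 2x + 1.
data = [(x, 2 * x + 1) for x in [0.0, 1.0, 2.0, 3.0, 4.0]]
w, b = train_linear(data)
print(f"learned w={w:.3f}, b={b:.3f}")
```

Running it prints parameters close to w = 2 and b = 1, the values that generated the toy data.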

Next, when a user types a question into the chat, the generative AI running on the AI server performs inference and returns an answer. This inference is also carried out on AI semiconductors, such as NVIDIA GPUs, installed in the AI server.
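Inference differs from training in that the parameters are fixed: the model only runs forward. Chat-style models typically produce an answer one token at a time, feeding each new token back in. The sketch below shows that loop with greedy decoding; the vocabulary and score table are hypothetical toy values standing in for a trained model's forward pass, not anything taken from ChatGPT.

```python
import math

# Hypothetical frozen parameters. In a real system these come out of the
# training stage; here they are hand-written toy numbers.
VOCAB = ["<end>", "GPUs", "run", "AI", "models"]
# SCORES[i][j]: score for token j following token i (a toy stand-in for a
# neural network forward pass).
SCORES = [
    [0.0, 0.0, 0.0, 0.0, 0.0],  # after "<end>" (unused)
    [0.1, 0.0, 2.0, 0.5, 0.2],  # after "GPUs"
    [0.1, 0.3, 0.0, 2.5, 0.4],  # after "run"
    [0.2, 0.1, 0.1, 0.0, 3.0],  # after "AI"
    [3.0, 0.5, 0.2, 0.1, 0.0],  # after "models"
]


def softmax(xs):
    """Turn scores into probabilities (shifted by the max for numerical stability)."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def generate(prompt_token, max_new_tokens=10):
    """Greedy autoregressive decoding: one forward pass per new token;
    the parameters are never updated during inference."""
    tokens = [prompt_token]
    for _ in range(max_new_tokens):
        probs = softmax(SCORES[VOCAB.index(tokens[-1])])
        # Pick the most probable next token (same as the highest score).
        next_id = max(range(len(probs)), key=probs.__getitem__)
        if VOCAB[next_id] == "<end>":
            break
        tokens.append(VOCAB[next_id])
    return tokens


print(" ".join(generate("GPUs")))
```

Starting from "GPUs", the loop picks "run", "AI", and "models", then stops at the end token.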

In other words, generative AI can be thought of as software that runs on AI semiconductors (such as NVIDIA GPUs) installed in AI servers.

The spread of generative AI such as ChatGPT shows no sign of stopping. As a result, the shortage of NVIDIA GPUs on the semiconductor market is becoming increasingly severe, and high-tech companies developing generative AI are competing to acquire as many NVIDIA GPUs as possible.

NVIDIA GPUs come in various types, but the most sought-after are the A100 (priced at $10,000 each), made on [sensitive word]'s 7nm process, and the H100 (priced at $40,000 each). These prices are extraordinary. Considering that a single DRAM chip costs about $10, an Apple iPhone processor about $100, and an Intel PC processor about $200, I have never seen a semiconductor priced at $10,000 to $40,000.
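The price gap is easy to quantify. The short calculation below uses only the unit prices quoted in the paragraph above; the dictionary layout and function name are just for illustration.

```python
# Unit prices as quoted in the article (USD, approximate).
prices = {
    "DRAM chip": 10,
    "iPhone processor": 100,
    "Intel PC processor": 200,
    "NVIDIA A100": 10_000,
    "NVIDIA H100": 40_000,
}


def ratio(expensive, cheap):
    """How many times more the first chip costs than the second."""
    return prices[expensive] / prices[cheap]


for gpu in ("NVIDIA A100", "NVIDIA H100"):
    for chip in ("DRAM chip", "iPhone processor", "Intel PC processor"):
        print(f"{gpu} = {ratio(gpu, chip):,.0f}x {chip}")
```

By these figures, an A100 costs 1,000 times as much as a DRAM chip and 50 times as much as an Intel PC processor, while an H100 costs 4,000 times and 200 times as much, respectively.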

The rapid expansion of data centers has increased the demand for GPUs in AI servers

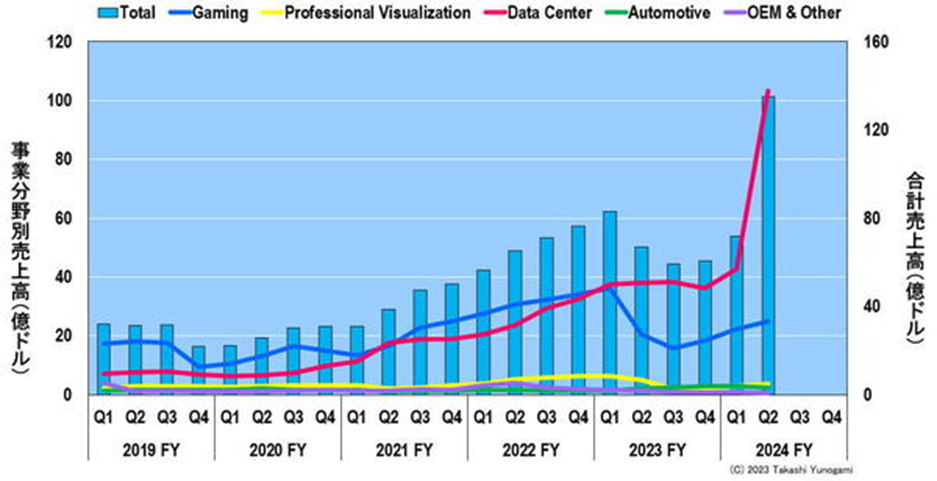

Figure 6 shows NVIDIA's quarterly revenue by business segment. NVIDIA's GPUs were originally developed for gaming, and as Figure 6 shows, gaming GPUs remained the largest source of sales until around fiscal year 2020 (corresponding to calendar year 2019, since NVIDIA's fiscal year ends in late January).

Figure 6: NVIDIA's quarterly revenue by business segment

Along the way, it turned out that GPUs, which can process large numbers of images in parallel, are well suited as AI semiconductors. As I recall, from around 2016 to 2018, NVIDIA GPUs were often used as the AI semiconductors in autonomous vehicles.

However, as Figure 6 shows, sales of automotive GPUs were never that large. This is because fully autonomous driving has not yet become widespread, and autonomous-driving pioneers such as Tesla have already begun developing their own AI semiconductors for self-driving cars.

From around fiscal year 2023 (calendar 2022), NVIDIA's sales of GPUs for data-center AI servers began to rise rapidly, and in fiscal year 2024 (calendar 2023) they are growing explosively.
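The offset between NVIDIA's fiscal years and calendar years comes from where its fiscal year ends. The sketch below assumes the convention stated in NVIDIA's annual reports, that the fiscal year ends on the last Sunday of January; the function names are hypothetical. Under that assumption, fiscal year N runs from late January of year N-1 to late January of year N, so it overlaps almost entirely with calendar year N-1.

```python
from datetime import date, timedelta


def last_sunday_of_january(year):
    """Return the last Sunday in January of the given calendar year."""
    d = date(year, 1, 31)
    # weekday(): Monday=0 ... Sunday=6; step back to the most recent Sunday.
    return d - timedelta(days=(d.weekday() - 6) % 7)


def nvidia_fiscal_year_range(fy):
    """Assumed convention: fiscal year `fy` ends on the last Sunday of January
    of calendar year `fy` and starts the day after the previous one ends."""
    start = last_sunday_of_january(fy - 1) + timedelta(days=1)
    end = last_sunday_of_january(fy)
    return start, end


for fy in (2020, 2023, 2024):
    start, end = nvidia_fiscal_year_range(fy)
    print(f"FY{fy}: {start} to {end}")
```

For example, this gives FY2024 as January 30, 2023 to January 28, 2024, which is why the article treats fiscal 2024 as roughly calendar 2023.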

Driven by the surge in demand for AI-server GPUs, NVIDIA's revenue has surpassed that of Intel and Samsung and is approaching that of [sensitive word]. If this trend continues, NVIDIA may overtake [sensitive word] as well.

Since 2010, Intel, Samsung, and [sensitive word], the three semiconductor companies with the highest sales, have been called the "three pillars." Now NVIDIA has suddenly joined their ranks, and it may even become the top semiconductor company by sales. It seems NVIDIA's time has come.

Copyright ©2015-2025 Shenzhen Slkor Micro Semicon Co., Ltd